Overview

This project explores image morphing, specifically face morphing. To morph one image into another, we first select correspondence points on both images that mark the same features. Using the Delaunay triangulation algorithm, we then build a triangle mesh over these points. The warp is computed triangle by triangle, as a change of basis between each pair of corresponding triangles. Finally, we cross-dissolve the pixel values of the warped images to create an intermediate image. These techniques were used to create a facial morphing animation, determine the mean face of a population, and extrapolate from the mean to create caricatures.

Defining Correspondences

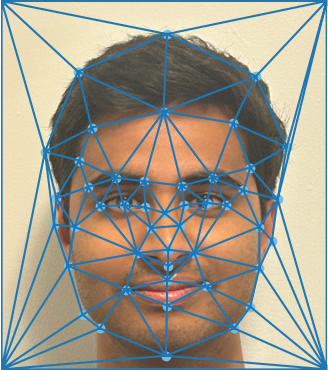

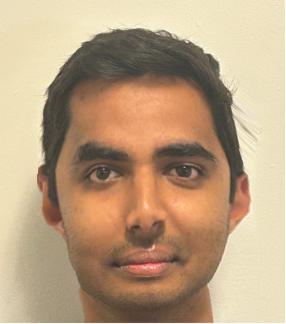

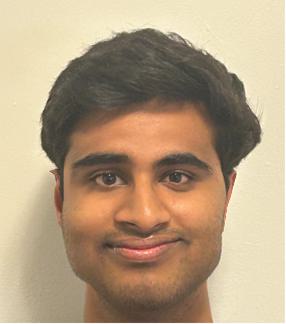

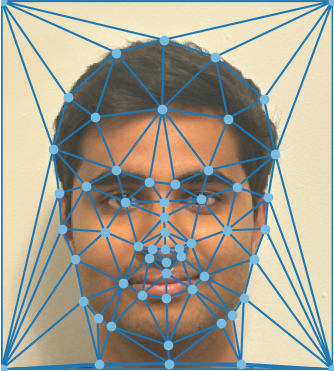

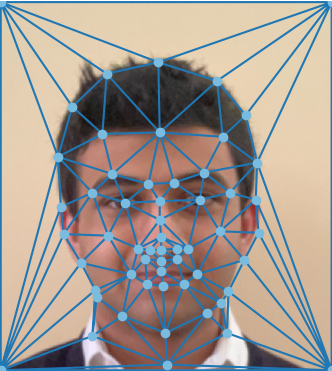

For this project, I decided to create a morphing animation from my face to my roommate Suchir's face. The first step in creating a facial morphing animation is to define correspondences between the two images. I first cropped images of Suchir and me to roughly the same size. Then, using an online tool, I selected 53 correspondence points on our faces and added the four corners of the image, for a total of 57 correspondences. Next, I ran the Delaunay triangulation algorithm on the average of the points selected in the two images. Triangulating the average points, rather than either image's own points, minimizes how much the triangles are distorted when warping toward either face.
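A minimal sketch of this step, assuming the clicked points are stored as (x, y) NumPy arrays of shape (53, 2) and using SciPy's `scipy.spatial.Delaunay`. The helper names `add_corners` and `average_triangulation` are hypothetical and not taken from the project's code.

```python
import numpy as np
from scipy.spatial import Delaunay

def add_corners(pts, height, width):
    """Append the four image corners to an (N, 2) array of (x, y) points."""
    corners = np.array([[0, 0], [width - 1, 0], [0, height - 1], [width - 1, height - 1]], dtype=float)
    return np.vstack([np.asarray(pts, dtype=float), corners])

def average_triangulation(im1_pts, im2_pts, height, width):
    """Triangulate the mean of two corresponding point sets (corners included).

    Returns both full point arrays and the (M, 3) array of vertex indices.
    The same indices are reused for both images, so triangles correspond.
    """
    p1 = add_corners(im1_pts, height, width)
    p2 = add_corners(im2_pts, height, width)
    mean_pts = (p1 + p2) / 2.0
    tri = Delaunay(mean_pts)
    return p1, p2, tri.simplices
```

Because the triangle indices come from the averaged points, the same `simplices` array can index either image's point array, which keeps each triangle in one face paired with its counterpart in the other.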

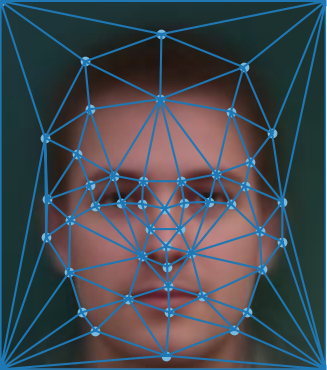

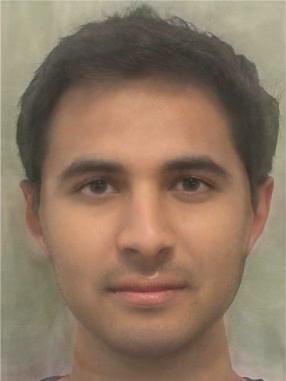

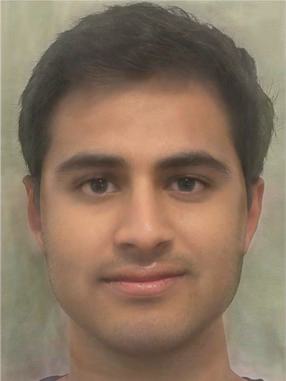

Computing the "Mid-Way Face"

To compute the "mid-way face" between Suchir and me, I warped both of our faces to the average geometry and then cross-dissolved the pixel colors. Cross-dissolving was straightforward: simply compute \(\frac{1}{2}\)(avg_im1 + avg_im2), where avg_im1 and avg_im2 are the two images warped to the average geometry. The image warping was more involved. Given the triangle mesh created by the Delaunay triangulation, I computed a change of basis between each pair of corresponding triangles:

$$p_{im1} = T_1 T_2^{-1} (p_{im2} - og_2) + og_1$$

where \(T_1\) is the change of basis matrix from the basis of a triangle in the original image to the standard basis, \(T_2\) is the change of basis matrix from the basis of the corresponding triangle in the warped image to the standard basis, and \(og_1\) and \(og_2\) are the origins (reference vertices) of these two triangles in the original and warped images, respectively. This maps each pixel \(p_{im2}\) of the warped image back to a location \(p_{im1}\) in the original image. Note, however, that \(p_{im1}\) is not guaranteed to land on integer pixel coordinates. To account for this, I interpolated linearly between the pixels neighboring \(p_{im1}\) (bilinear interpolation) to determine the color of pixel \(p_{im2}\).
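The warp can be sketched in NumPy as below. This is an illustration under stated assumptions, not the project's actual code. Points are (x, y) float arrays, each triangle's origin is taken to be its first vertex, and the helper names (`triangle_basis`, `map_points`, `bilinear_sample`, `warp`, `midway_face`) are hypothetical. `warp` loops over triangles and finds the output pixels inside each destination triangle by checking their coordinates in that triangle's basis. It then maps those pixels back with the change-of-basis formula and samples the source image bilinearly.

```python
import numpy as np

def triangle_basis(tri):
    """Return (T, og) for a (3, 2) triangle: og is its first vertex and the
    columns of T are the edge vectors from og, so T maps triangle
    coordinates to the standard basis."""
    og = tri[0]
    T = np.column_stack([tri[1] - og, tri[2] - og])
    return T, og

def map_points(p_im2, tri1, tri2):
    """p_im1 = T1 T2^{-1} (p_im2 - og2) + og1, applied to (K, 2) points."""
    T1, og1 = triangle_basis(tri1)
    T2, og2 = triangle_basis(tri2)
    A = T1 @ np.linalg.inv(T2)
    return (p_im2 - og2) @ A.T + og1

def bilinear_sample(img, x, y):
    """Bilinearly sample img (H, W) or (H, W, C) at float columns x, rows y."""
    h, w = img.shape[:2]
    x = np.clip(x, 0, w - 1)
    y = np.clip(y, 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    if img.ndim == 3:  # broadcast the weights over color channels
        fx, fy = fx[:, None], fy[:, None]
    top = (1 - fx) * img[y0, x0] + fx * img[y0, x1]
    bottom = (1 - fx) * img[y1, x0] + fx * img[y1, x1]
    return (1 - fy) * top + fy * bottom

def warp(img, src_pts, dst_pts, simplices):
    """Inverse-warp img from the src_pts geometry to the dst_pts geometry."""
    h, w = img.shape[:2]
    out = np.zeros(img.shape, dtype=float)
    for s in simplices:
        tri1, tri2 = src_pts[s], dst_pts[s]
        # Pixel bounding box of the destination triangle, clipped to the image.
        xmin, ymin = np.floor(tri2.min(axis=0)).astype(int)
        xmax, ymax = np.ceil(tri2.max(axis=0)).astype(int)
        xmin, ymin = max(xmin, 0), max(ymin, 0)
        xmax, ymax = min(xmax, w - 1), min(ymax, h - 1)
        ys, xs = np.mgrid[ymin:ymax + 1, xmin:xmax + 1]
        p_im2 = np.column_stack([xs.ravel(), ys.ravel()]).astype(float)
        # Coordinates in the destination triangle's basis: T2^{-1}(p - og2).
        T2, og2 = triangle_basis(tri2)
        uv = (p_im2 - og2) @ np.linalg.inv(T2).T
        eps = 1e-9
        inside = (uv[:, 0] >= -eps) & (uv[:, 1] >= -eps) & (uv.sum(axis=1) <= 1 + eps)
        p_in = p_im2[inside]
        p_im1 = map_points(p_in, tri1, tri2)
        rows, cols = p_in[:, 1].astype(int), p_in[:, 0].astype(int)
        out[rows, cols] = bilinear_sample(img, p_im1[:, 0], p_im1[:, 1])
    return out

def midway_face(im1, im2, im1_pts, im2_pts, simplices):
    """Warp both images to the average geometry, then cross-dissolve 50/50."""
    avg_pts = (im1_pts + im2_pts) / 2.0
    avg_im1 = warp(im1, im1_pts, avg_pts, simplices)
    avg_im2 = warp(im2, im2_pts, avg_pts, simplices)
    return 0.5 * (avg_im1 + avg_im2)
```

Testing only each triangle's bounding box keeps the sketch short. Pixels on an edge shared by two triangles are simply written twice, which is harmless because both triangles map them to the same source location.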

The Morph Sequence

Using the technique explained above, I created a morphing animation from my face to Suchir's face with a total of 45 frames. The geometry for each frame was computed as a weighted average of the correspondence points of the two original images. For frame i (counting from 0), the points are `((num_frames - 1 - i) * im1_pts + i * im2_pts) / (num_frames - 1)`. The frames were then assembled into a GIF that runs at 30 fps.
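The per-frame geometry and the frame loop can be sketched as follows. `warp_fn` is a hypothetical caller-supplied function with signature `warp_fn(img, src_pts, dst_pts)` that performs the triangle warp described earlier. Using the same fraction `t = i / (num_frames - 1)` for the cross-dissolve is also an assumption, since the write-up only specifies the geometry weights.

```python
import numpy as np

def frame_geometry(im1_pts, im2_pts, i, num_frames):
    """Weighted average of the correspondences for frame i (0-indexed)."""
    im1_pts = np.asarray(im1_pts, dtype=float)
    im2_pts = np.asarray(im2_pts, dtype=float)
    return ((num_frames - 1 - i) * im1_pts + i * im2_pts) / (num_frames - 1)

def morph_sequence(im1, im2, im1_pts, im2_pts, warp_fn, num_frames=45):
    """Return num_frames images morphing im1 into im2.

    warp_fn(img, src_pts, dst_pts) is a hypothetical piecewise-affine warp
    supplied by the caller. The dissolve weight t = i / (num_frames - 1)
    is an assumption: it reuses the same fraction as the geometry.
    """
    frames = []
    for i in range(num_frames):
        t = i / (num_frames - 1)
        pts = frame_geometry(im1_pts, im2_pts, i, num_frames)
        w1 = warp_fn(im1, im1_pts, pts)  # im1 warped to this frame's shape
        w2 = warp_fn(im2, im2_pts, pts)  # im2 warped to this frame's shape
        frames.append((1 - t) * w1 + t * w2)
    return frames
```

At 30 fps, each of the 45 frames is shown for 1/30 s, so one pass through the morph lasts 1.5 s.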